My agentic model at the end of June 2025

Some context on my agentic coding usage

It’s a little disorienting how fast things are changing in the agentic coding space. Even just using the words “agentic coding” is a shift that represents a new underlying model and framework for thinking about using llms for software development. “Vibe coding” just feels so passé May.

I realize I was starting to frame it as agents in My vibe coding process atm and The CLI LLM Agent Journey So Far but the process has certainly evolved. The original goal of multiple Claude Code CLI instances was context window management - I had noticed that too many different directions in a single context window just leads to mayhem. But the manual model became onerous to manage the markdown interactions between instances. And whereas before was thinking I would build an Autogen to do handoffs between instances as a way to reduce managing my communication load, instead I went in a different, better direction.

A couple of guys in the coding WhatsApp mentioned letting GPT Codex or Claude GitHub actions (GHA) trawl through GitHub issues. That sounded crazy to me and maybe solved my “messaging bus” issues. After exploring a bit and a bunch of trial and error (in the age of AI, trial and error meant about 4 hours), I realized that rather than developing an AutoGen and hand tooling the quality gates, I could just stick almost everything in GitHub. And when TDD or GitHub pre-commit workflows don’t catch all the quality issues, any other errors come out in the Vercel console during PR->main merges. (not being a trained developer I feel a little like I’m an imposter on another planet when I use terms like that; c’est la via).

That said, I have not gotten the pre-work and planning into GitHub. I wonder if that’s a good idea. Theoretically I could have a planning repo that builds/decomposes a Product Requirements Doc PRD into requirements (functional, architecture and technical) and then into prioritized, dependent and sequenced issues for work in GitHub, but I have not tried it; not sure I will. At the moment, I have a mini-autogen hand off process that takes the PRD and does the full decomposition to markdown. After Jesse suggested using Claude Code SDK and I started using it and starting getting better at using it, I may sunset the autogen and just use SDK - and SDK uses my Max subscription which is cheaper than using Autogen with API. That said, Autogen also allows me to challenge decomposition with different models. More on that below. Hmm.

Just a note - I’m mainly working on building (and “building feels more appropriate than “developing”) end-user web/app style products and app-to-app API connectors for workflow improvements. And a further note - all this process development effort is geared toward a single goal: operational readiness for our stealth custom software shop.

For the moment, here is my work process: I know it’s over-engineered and somewhat painful, not fully deterministic and fraught with problems. It does actually deliver functioning applications. shrug

- Idea dev: Use GPT o3 or Claude Opus, usually in a browser or app interface. Work out as much of the logic as possible. Copy and paste to a markdown file. I hate copy and paste, but here we are.

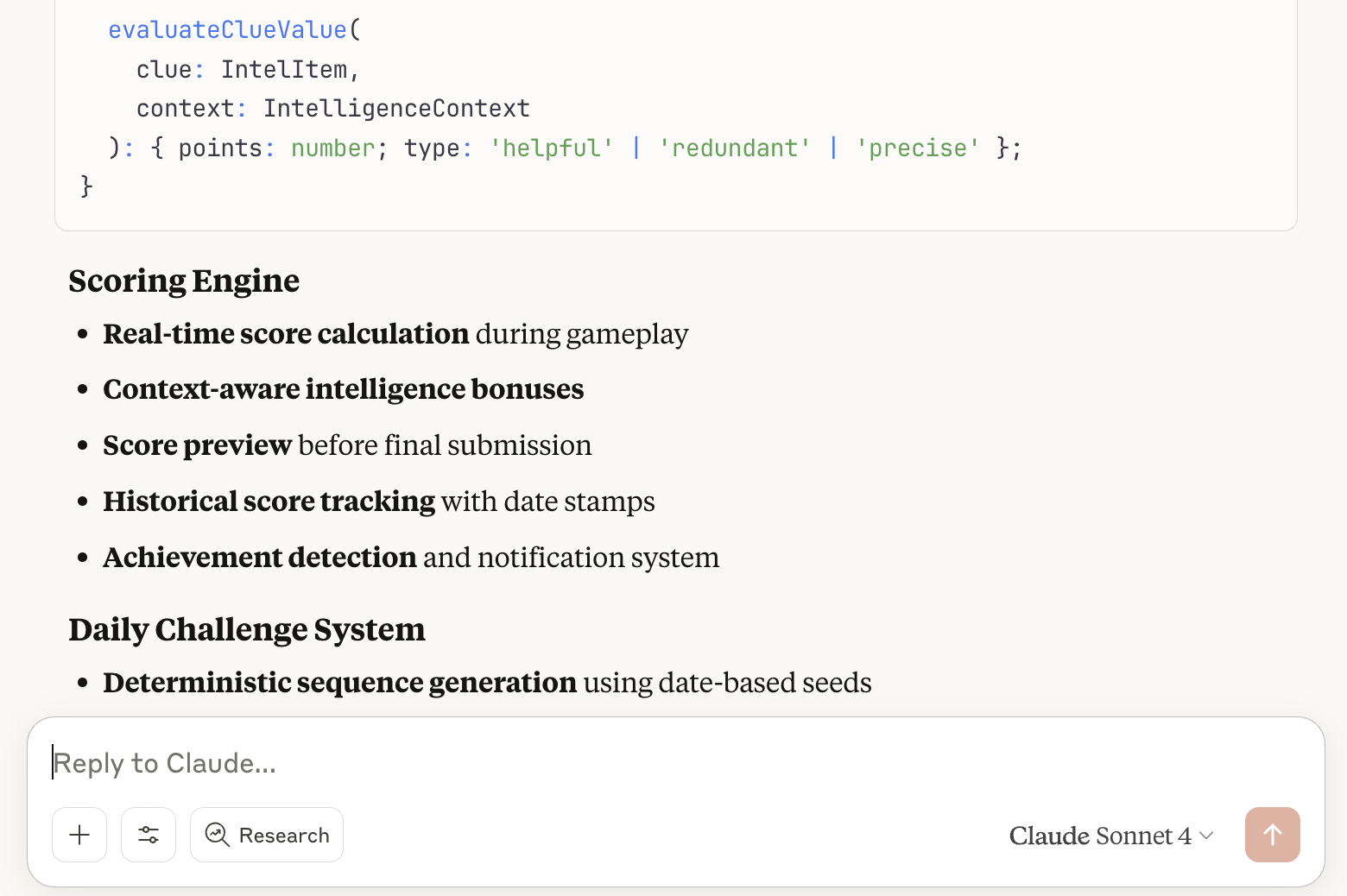

Generating a PRD (or at least a general app definition and requirements doc) using Claude desktop. I like to try to hash out workflow and logic here, not just definition of what it is, also how it works. This one is a two player dice game I came up with a few years ago.

Generating a PRD (or at least a general app definition and requirements doc) using Claude desktop. I like to try to hash out workflow and logic here, not just definition of what it is, also how it works. This one is a two player dice game I came up with a few years ago.

- Repo start: Initialize a local repo with my over-engineered Claude Structure. Create/connect a GitHub Repo. Initialize the GitHub repo with yml workflows and TDD/lint/pre-commit-hooks templates (templates Claude created - just a side note - often Claude claims it’s not following the TDD templates, but when I compare, they are exact. So, maybe it is or not; not sure?). The GHA workflows step is really important to get right and test that it’s working. Then Create a Vercel instance (if appropriate), add in Neon for Postgres (if needed). Drop the PRD into the project directory.

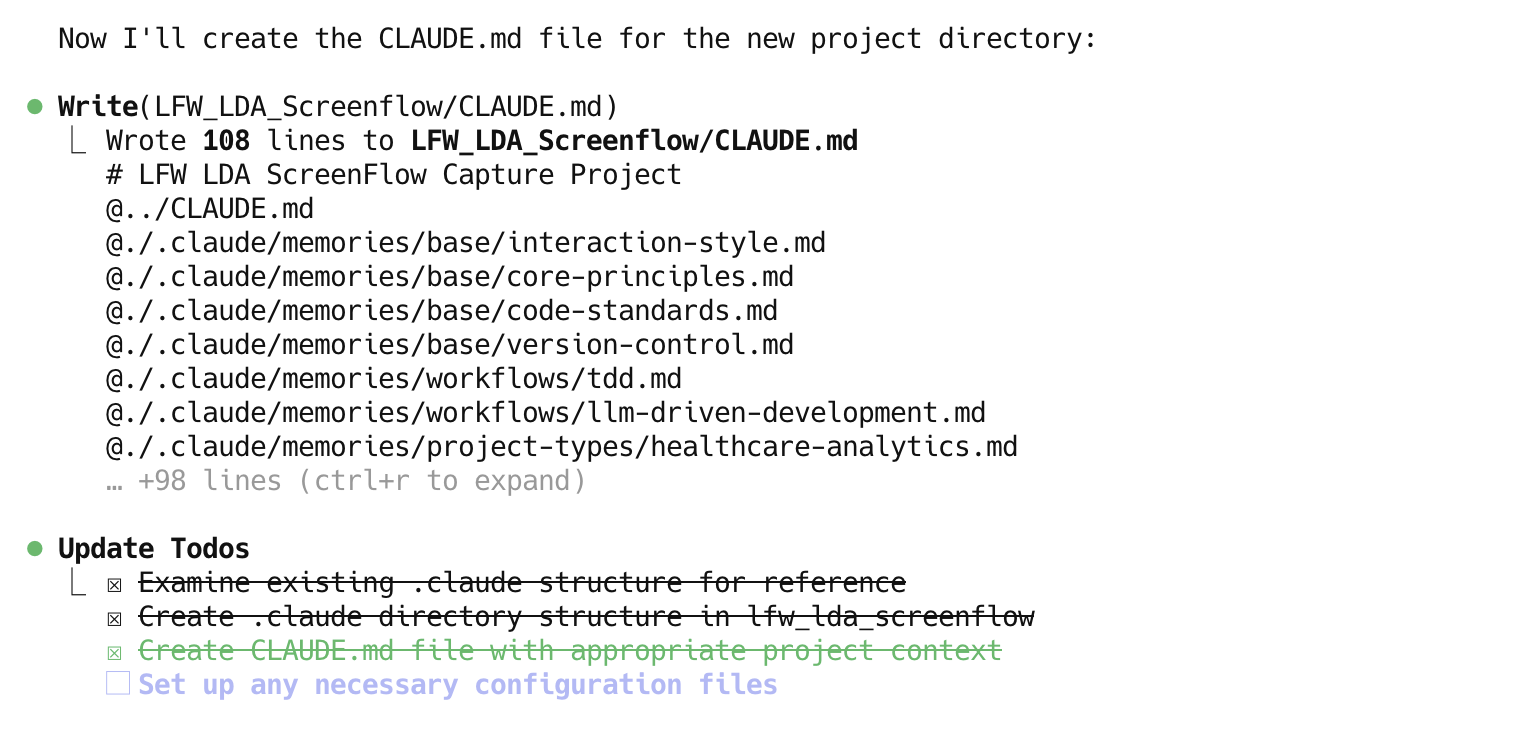

Initializing a local repo. This screenshot doesn’t pick up creating .git but that’s in there too.

Initializing a local repo. This screenshot doesn’t pick up creating .git but that’s in there too.

-

Requirements/Mock-up: This is where things are shifting as I write up this process: I had been doing mostly requirements dev but I’m recognizing the importance of doing mock ups before full requirements documentation. In my experience, buyers struggle to understand something until they see and touch a working prototype or mock up. Giving them a mockup is super important when building for end-users and aligning to their needs. It also helps with satisfaction, buying motivation and roadmap development. That said, you also have to be careful you don’t mock up something that is impossible to build within cost constraints.

- Requirements dev: Use Autogen to decompose the PRD into functional requirements, architecture requirements (with reference to our claude-imposed-tech-stack) and technical requirements; in that order. All output to markdown. Then I run it again (or Claude Code CLI) to challenge the requirements - what did we miss, what should we take out, what needs improvement. This is important since I find Claude really, really, really, really likes to over-engineer things. You have to explicitly tell it to watch out for over-engineering and to simplify. Of course, then it goes to too far. It’s a dance.

- I am starting to move to using Claude Code SDK for this process. I tell Claude Code CLI “ We are decomposing our PRD into requirements. Spawn N Claude Code SDK agents. One set should develop functional requirements. One set should create architecture requirements and one should create technical requirements. All agents need to adhere to our requirements templates and output to markdown. You are the manager, monitor for quality.” It’s not quite right yet. I am trying a more sequenced approach with an “inspector agent” that gets mad a the agents when they don’t behave correctly. More on that in a subsequent post.

- Mock up: Use v0 and/or Claude Code CLI in a sub-directory in the local repo.

- Once the mock up is where I want it, I use Claude Code CLI to review the notes and mock up to develop a combination of PRD and UX requirements, again all in markdown. Then go back to AutoGen for decomposition.

- Thinking of adding mermaid in but have not gotten there yet. Goal is a “visual language” that a LLM can understand in text that does not use multi-modal capabilities due to context window flooding.

- Unfortunately I am bad at remembering I need to use the TDD/linting/pre-commit hooks model even with basic HTML mockups. I get impatient and just want to try to mock up locally, but invariably, everything goes down the tubes about 2 hours into a mock up project. Does’t seem terrible, but when AI allows you to move a mach speed, 2 hours is forever. And a mess is just a mess and throws any new context window into a tizzy. Be smart Braydon, use the process.

- Once the mock up is where I want it, I use Claude Code CLI to review the notes and mock up to develop a combination of PRD and UX requirements, again all in markdown. Then go back to AutoGen for decomposition.

- Requirements dev: Use Autogen to decompose the PRD into functional requirements, architecture requirements (with reference to our claude-imposed-tech-stack) and technical requirements; in that order. All output to markdown. Then I run it again (or Claude Code CLI) to challenge the requirements - what did we miss, what should we take out, what needs improvement. This is important since I find Claude really, really, really, really likes to over-engineer things. You have to explicitly tell it to watch out for over-engineering and to simplify. Of course, then it goes to too far. It’s a dance.

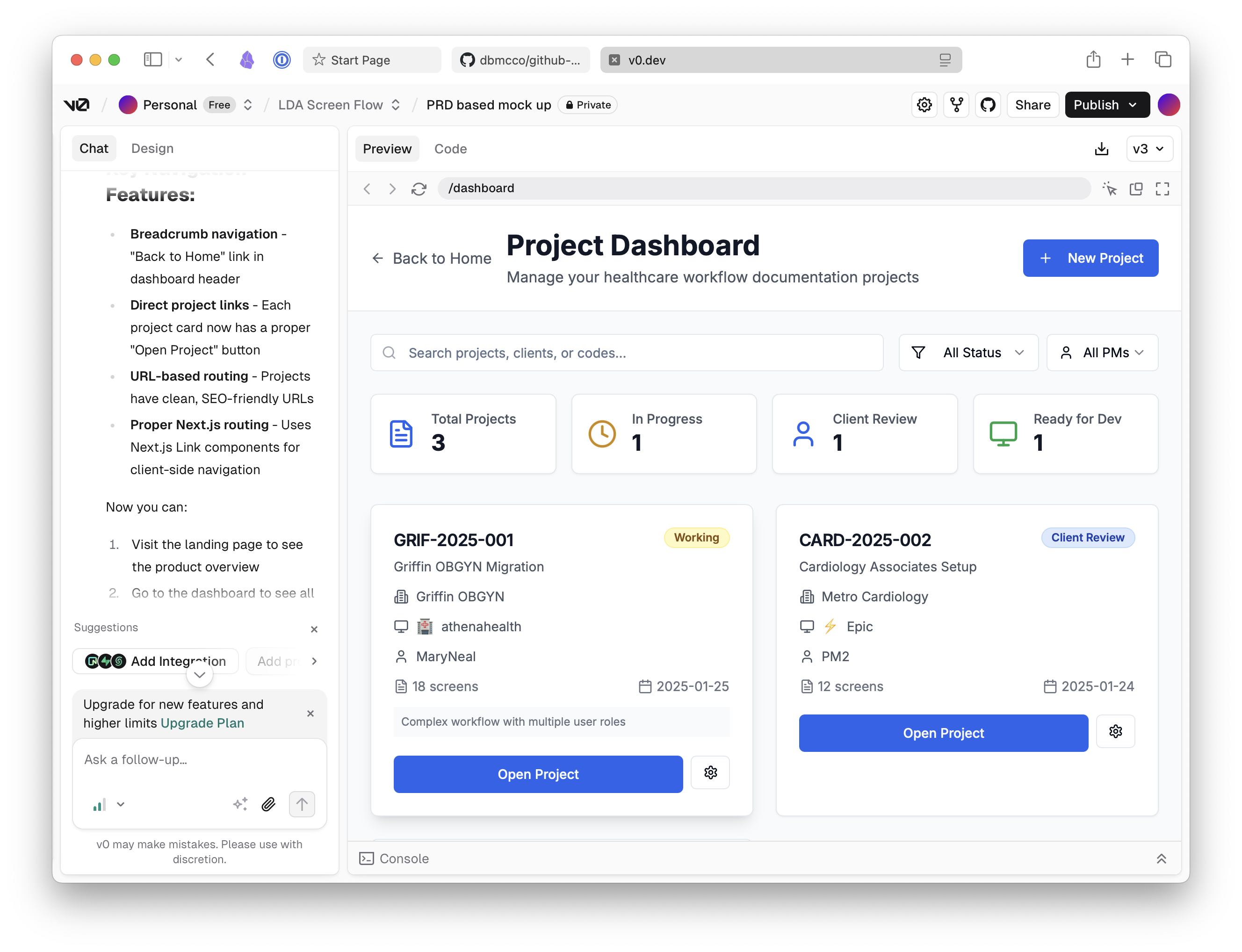

Experimenting with v0. I prefer Claude Code CLI for visibility but I am wondering if v0 has more controls built in and so reduces overhead for mockups.

Experimenting with v0. I prefer Claude Code CLI for visibility but I am wondering if v0 has more controls built in and so reduces overhead for mockups.

- Issues development: Work with Claude Code CLI to decompose issues. Unfortunately, from this stage on, my lack of coding experience and skills requires me to put tighter and tighter boxes around the AI to check itself since I can’t do that alone. Thus:

- I ask Claude to “review all foundational architecture and technical requirements, and create detailed issues for development using TDD/linting/pre-commit hooks and quality gates. Pay attention to and document dependencies. No issue should be larger than 15 minutes of LLM development time.” I have no idea if it knows how long it would take to develop something; this guidance is intended mostly to keep the issues super small. Then I have it challenge those again for over-engineering. Claude LOVES to over-engineer. It seems to help. I need to be more consistent in following my process.

- One thing I’m unsure about: I currently have TDD tests in the same issue as the development. I am not sure if I should break out the tests separately, or at least have the development in an issue comment. Another hmm.

- My work process here is not perfect or fully repeatable yet. Sometimes I forget to have it write to local markdown and just have it start pushing issues to GH. That’s dumb. Always follow the process Braydon.

- One thing I need to nail down - do I create ALL issues at one time or iteratively as the project progresses? Recently I experienced something I am calling “template drift” where the TDD template I had stopped working for the issues we were developing. I don’t know why. Maybe a context window issue? This issue implies a need for more iterative issue management, but I’m not sure.

-

Project Management Gap: I need a better project management model. Some of the guys create a markdown project plan doc that Claude CLI uses. I had hoped having the GitHub issues as my central planning space would suffice; it does not. I have recently started exploring using GitHub projects since it’s supposed to be fully integrated; it’s not - and more importantly Claude doesn’t seem to know what to do with it. May have to just use the markdown project file way of doing it - something similar had been working for me when I was manually controlling different Claude Code CLI instances.

- Total-ish AI Coding: At the command line I have Claude CLI begin to add @claude to issues for development. The yml workflows handle quality gates. I have it run as many in parallel as I can based on the dependencies. It’s fun to watch the GitHub console light up with the work going on. Caveats:

- I do have to add a little human intelligence here since it will do things like think that since the DataBase architecture is different than the middleware it can do both at the same time. Which in principle this is possible, I find things just don’t wire up well if you let it all run too far down the path. And I have not figured out how to keep the two sets of issues in sync. Claude CLI does not seem thoughtful enough to handle that type of inter-dependent management. Or maybe it’s just me.

- Since Claude CLI can’t listen for events, to keep it rolling on issues, I tell it to “monitor ongoing issues with sleep every 90 seconds. Once the issue is complete, review for quality; if passed, PR and start the next issue. Merge once Epic is ready”. 90 seconds seems to be a sweet spot since Claude appears to have a 2-minute timeout waiting for anything to run. It will eventually give up after N cycles (not sure if this is intentional or not). This way of working is hit or miss for completion. I am open to any ideas on how to keep Claude monitoring and running on multiple, sequenced issues. Cron job? Tmux (I don’t think I can get this to work since Claude is a CLI app, not actually the CLI and I don’t trust Claude to spit out headless Claude with appropriate instructions, although that it cool in principle. Ugh.

- Even with TDD and instructions for linting and pre-commit hooks, @Claude GHA messes up and the issues break. Claude CLI has to instruct @claude GHA to go and fix it.

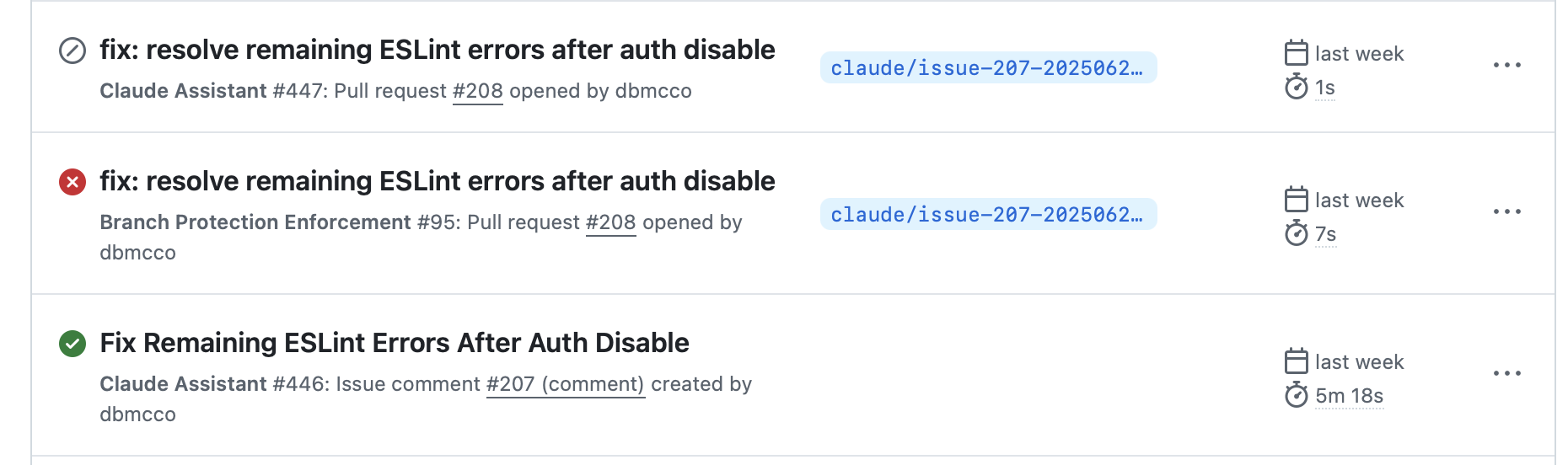

Watching Claude GHA work through actions is satisfying until it’s not. GHA totally wrecked Google Authentication integration and I had to do a massive roll back. Then something in the context got further wrecked and now I have a mess on my hands.

Watching Claude GHA work through actions is satisfying until it’s not. GHA totally wrecked Google Authentication integration and I had to do a massive roll back. Then something in the context got further wrecked and now I have a mess on my hands.

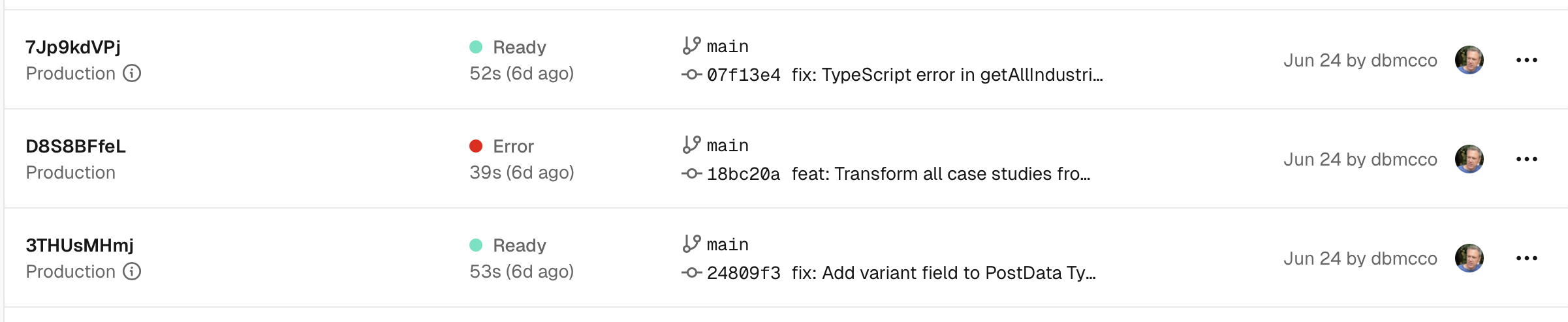

- Pushing to “production:” When Claude PR and merges to main, Vercel automatically picks up the commit and deploys either to a preview or production. I think if we PR to a branch, it is a preview, if it’s to main it’s a merge. I have not looked closely to see what my config is - need to do that. If something doesn’t work in the Vercel deploy, I can either manually copy the logs to the Claude CLI to add a comment to the appropriate issue for correction, or I can ask Claude to monitor the Vercel logs for errors. I’ve started to refrain from doing this since it adds mess to the context window.

Vercel deployments from GitHub is super slick and the logging console is a last line of defense against quality issues.

Vercel deployments from GitHub is super slick and the logging console is a last line of defense against quality issues.

Some other notes on context window management.

Context windows are the boon and bane of LLM development. Based on how LLMs work, full context gets passed at each turn. This seems dumb to me, but it’s an inherent limitation to how these tools function at the moment. Thus, keeping an ultra-clean and tight context window is proving to be critical. But balancing that with what you need for project and process specifics is tough.

I am using the recursive Claude memory capability with modular @ calls in projects and for parent directories. It sometimes loads everything and sometimes does not. If it does, that loads too much into the context window. I have not gone into each of my /.claude files to see if they are consistent and are what is causing variability, but will. I also instruct Claude to “update your claude.md project memory with where we are and what is next”. This has been my mechanism for ensuring consistently between context switches or compacts. But unless I go in an prune the Claude file, it can get unwieldy with status updates (wow does Claude like to congratulate itself how well it’s doing). This slop messes up the context window.

If Claude gets into a troubleshooting loop, this is a death spiral for context window management. I will stop and start a new interim window for analysis troubleshooting, and when that’s complete, start a fresh context window to get development back on track. GitHub actions has far less of this, but sometimes spins out of control.

And, if Jesse’s LACE CLI has its way, a LOT of these issues will go away. Get it done!